Cosmic Dust was produced by Christian Petersen and me for the ‘Skyward!’ visual arts exhibit at Seattle’s 2012 Bumbershoot Festival in Fisher Pavilion. Christian and I are working together as I Want You.

It started with the idea of taking a visual I made for a concert a year earlier and converting it into an installation piece. Very little of the original code ended up being used because massive revisions and additions were needed (I’ll get into specifics later). The biggest change was integrating an XBox Kinect into the project. We chose the Kinect not only because it makes user interaction easy, but also because it is a newer technology that I am very interested in and actively experimenting with.

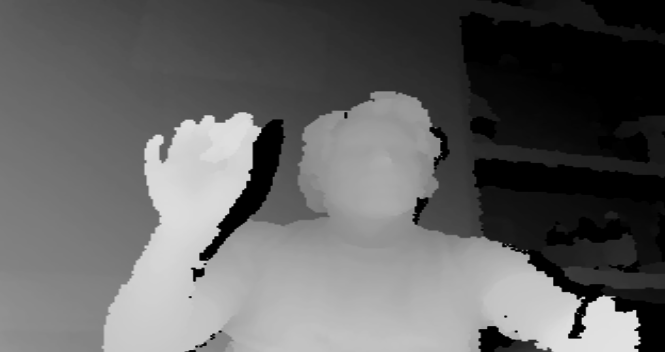

The project I started with creates something like a huge particle/‘dust’ field floating through a static, flat gravitational field – you could think of it like marbles rolling around and settling on a lumpy, uneven floor. For Cosmic Dust we would be using a Kinect, which generates a 3D point cloud, so I needed to take the project out of the 2D plane and into 3D space – now, instead of marbles on an uneven surface, it would be like comets orbiting planets!
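
To make the 2D-to-3D move concrete, here is a minimal sketch of particles orbiting fixed attractors in 3D space. It is not the Cosmic Dust code: the class `OrbitSketch`, the constants `G` and `SOFTENING`, and the choice of softened inverse-square gravity stepped with semi-implicit Euler integration are all assumptions made for illustration, written in plain Java (the language Processing builds on).

```java
// Hypothetical sketch: comet-like particles pulled toward fixed "planets" in 3D.
final class OrbitSketch {
    static final double G = 1.0;          // assumed gravitational strength
    static final double SOFTENING = 0.01; // assumed; keeps the force finite near a planet

    /** A fixed attractor with a mass. */
    static final class Planet {
        final double x, y, z, mass;
        Planet(double x, double y, double z, double mass) {
            this.x = x; this.y = y; this.z = z; this.mass = mass;
        }
    }

    /** Sum of softened inverse-square pulls from every planet at position p. */
    static double[] acceleration(double[] p, Planet[] planets) {
        double[] a = new double[3];
        for (Planet pl : planets) {
            double dx = pl.x - p[0], dy = pl.y - p[1], dz = pl.z - p[2];
            double r2 = dx * dx + dy * dy + dz * dz + SOFTENING * SOFTENING;
            double scale = G * pl.mass / (r2 * Math.sqrt(r2)); // G*m / r^3
            a[0] += dx * scale;
            a[1] += dy * scale;
            a[2] += dz * scale;
        }
        return a;
    }

    /** Semi-implicit Euler: update velocity first, then move with the new velocity. */
    static void step(double[] pos, double[] vel, Planet[] planets, double dt) {
        double[] a = acceleration(pos, planets);
        for (int i = 0; i < 3; i++) {
            vel[i] += a[i] * dt;
            pos[i] += vel[i] * dt;
        }
    }

    public static void main(String[] args) {
        Planet[] planets = { new Planet(0, 0, 0, 1.0) };
        double[] pos = {1, 0, 0};
        double[] vel = {0, 1, 0}; // sideways speed of 1 gives a near-circular orbit here
        for (int i = 0; i < 1000; i++) step(pos, vel, planets, 0.001);
        System.out.printf("x=%.3f y=%.3f z=%.3f%n", pos[0], pos[1], pos[2]);
    }
}
```

Starting at distance 1 from a unit-mass planet with a sideways speed of 1 produces the ‘comet’ behavior described above; the softening term keeps a particle that passes very close to a planet from being flung away by an unbounded force.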

Interestingly, getting the Microsoft XBox-branded Kinect to work from a programming standpoint is something like 100 times easier if you are working on a Mac with Processing than if you have a PC and want to use the MS Kinect SDK or one of the other options.

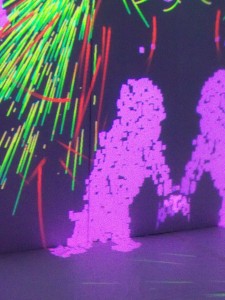

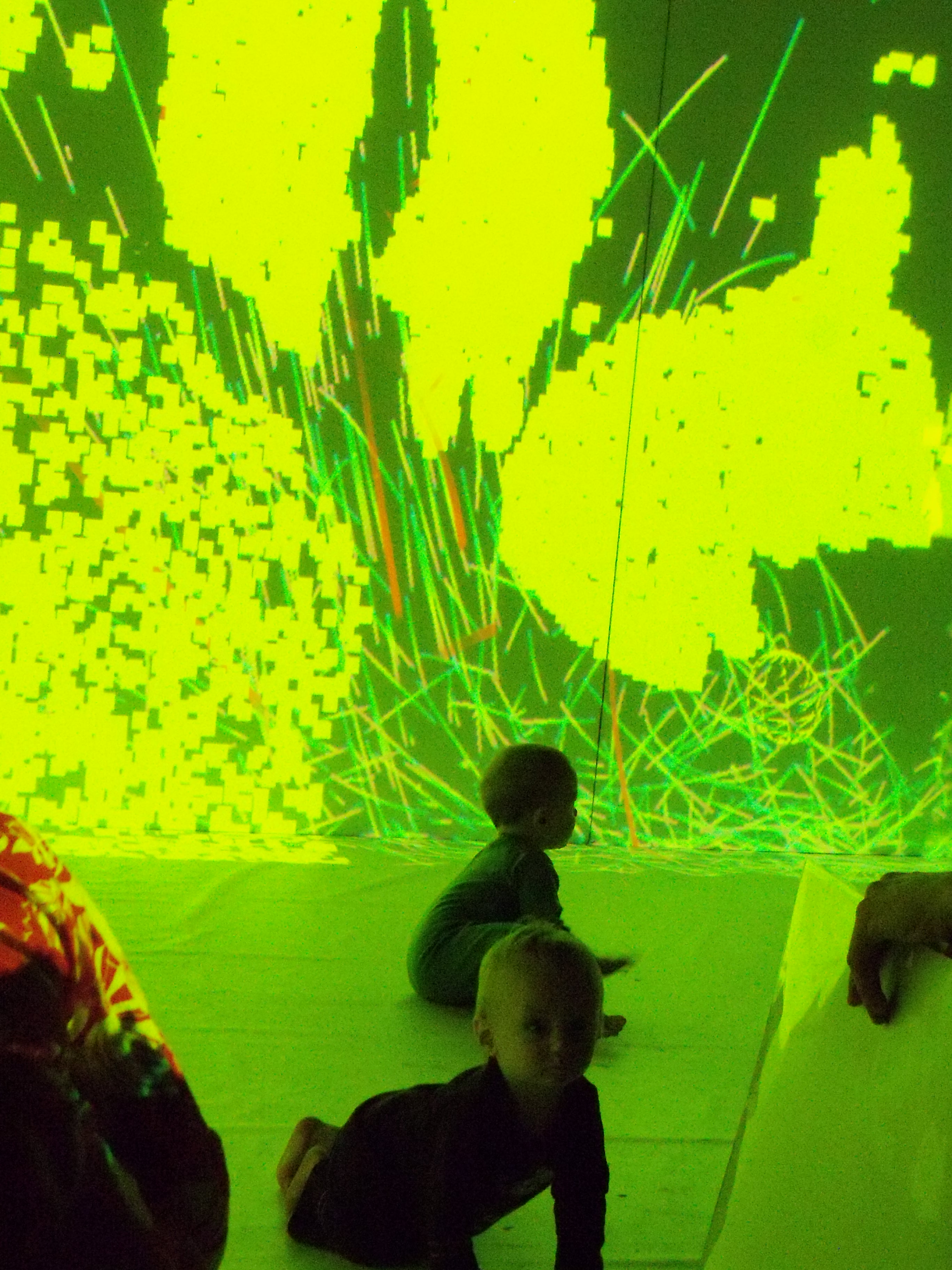

Anyway, the Kinect captures the depth field of whatever is in front of it; in the case of Cosmic Dust, that is a large room that is at times filled with people. When people are in the room, their silhouettes are illuminated in a shimmering rainbow of 3D squares. As people walk around the room, bright red streamers descend out of their silhouettes into the orbiting motion of the particles and the spheroid ‘planets’ below. Two projectors for each half of the room mirror the images created by the software.
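
Turning a depth image into 3D points is usually done with a pinhole camera model: each pixel is pushed out along its viewing ray by its measured depth. The sketch below is an assumption about how this can be done, not a transcription of the installation code. `DepthToWorld` is a hypothetical name, depth is assumed to be already converted to metres, and the intrinsics `FX`, `FY`, `CX`, `CY` are ballpark Kinect v1 depth-camera values that should be treated as placeholders for a real calibration.

```java
// Hypothetical sketch: pinhole back-projection of one depth pixel into camera space.
final class DepthToWorld {
    // Assumed, approximate depth-camera intrinsics in pixels; calibrate your own sensor.
    static final double FX = 594.21, FY = 591.04; // focal lengths
    static final double CX = 339.31, CY = 242.74; // optical centre

    /** Pixel (u, v) with depth z in metres -> {x, y, z} in metres, camera at the origin. */
    static double[] toWorld(int u, int v, double z) {
        double x = (u - CX) * z / FX;
        double y = (v - CY) * z / FY; // image y grows downward, so "up" is negative here
        return new double[] {x, y, z};
    }

    public static void main(String[] args) {
        double[] p = toWorld(500, 100, 2.0);
        System.out.printf("x=%.3f y=%.3f z=%.3f%n", p[0], p[1], p[2]);
    }
}
```

Running every (subsampled) pixel of a frame through `toWorld` yields the point cloud that silhouettes and particles can then live in.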

On the floor of the room a separate projection is cast which has only the background orbital motion and colorful, shimmering text reading, “i want you. art and design.”

We had five projectors, two computers (a Mac mini and a Windows laptop), a microphone, a pre-amp, and an XBox Kinect running the installation. I really need to give a shout-out to Bumbershoot and Avidex for making that happen. Not only did they provide five high-end projectors, they also went to the trouble of installing them on the ceiling for us. Thank you so much!! We couldn’t have done it without you!

Another big thanks goes to Shelly Leavens and Jana Brevick, who curated ‘Skyward!’, the visual arts exhibit we were invited to be a part of.

Now, for the Hot Deetz! Programmatically, I had some interesting problems with this piece, but it came together well in the end. One of my biggest concerns was how to keep a completely software-rendered, Java-based 3D application running in near real time. Processing has OpenGL options for those of us who can wheel and deal, but the library I used for Kinect support, Daniel Shiffman’s OpenKinect for Processing, doesn’t play very nicely with that renderer, so it was off the table. As a result, I couldn’t count on GPU code to keep everything running fast, and many adjustments (and corner-cutting improvements) had to be made to get the app to perform as well as it had before it went 3D.
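
One common way to cut corners without a GPU is to process only every Nth depth pixel and to drop readings outside the depth range you care about. The sketch below illustrates that idea under stated assumptions; it is not a record of the specific adjustments made for Cosmic Dust. It assumes a 640×480 frame (the Kinect v1 depth resolution) stored row-major, and `DepthSubsample`, `stride`, `near`, and `far` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: strided sampling of a depth frame to reduce per-frame work.
final class DepthSubsample {
    static final int WIDTH = 640, HEIGHT = 480; // Kinect v1 depth frame size

    /**
     * Returns flat indices of every `stride`-th pixel in x and y whose depth lies
     * in [near, far]. near/far are assumed cutoffs in whatever units `depth` uses.
     */
    static List<Integer> sample(int[] depth, int stride, int near, int far) {
        List<Integer> kept = new ArrayList<>();
        for (int y = 0; y < HEIGHT; y += stride) {
            for (int x = 0; x < WIDTH; x += stride) {
                int i = x + y * WIDTH; // row-major index
                int d = depth[i];
                if (d >= near && d <= far) kept.add(i);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        int[] depth = new int[WIDTH * HEIGHT];
        Arrays.fill(depth, 800);
        System.out.println(sample(depth, 1, 500, 1000).size()); // 307200
        System.out.println(sample(depth, 4, 500, 1000).size()); // 19200
    }
}
```

A stride of 4 keeps 1/16 of the pixels, which shrinks the work for everything downstream (silhouette detection, drawing the squares, spawning particles) at the cost of a blockier silhouette.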

Shiffman’s Kinect library’s simplicity is its true grace. Beyond getting you started from scratch with an XBox Kinect in about 30 seconds, though, it can leave you wanting. The library offers little in the way of user ‘skeleton’ data or other tracking info, OpenGL rendering support, or sound from the microphones on the device. It also appears to have a memory leak that causes Java to slowly eat away at your system resources until it starves itself and crashes (after about 4.75 hours on my system).

In order to visualize people with the sensor using this library, I used a technique that roughly approximates a common computer vision algorithm called background subtraction. You can think of it as using the XBox Kinect to create a mold of the empty room and then comparing that mold to the shape of the room when there are people in it. When you subtract the mold, you are left with only the people!
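
The ‘mold’ comparison can be sketched in a few lines. This is a simplified take on background subtraction under stated assumptions: depth frames are `int` arrays whose values grow with distance, invalid readings are not handled, and `BackgroundSubtraction` and its `threshold` parameter are hypothetical names rather than anything from the original project.

```java
import java.util.Arrays;

// Hypothetical sketch: depth-based background subtraction.
final class BackgroundSubtraction {
    /** Averages several empty-room depth frames into a background "mold". */
    static float[] captureBackground(int[][] emptyRoomFrames) {
        int n = emptyRoomFrames[0].length;
        float[] mold = new float[n];
        for (int[] frame : emptyRoomFrames) {
            for (int i = 0; i < n; i++) mold[i] += frame[i];
        }
        for (int i = 0; i < n; i++) mold[i] /= emptyRoomFrames.length;
        return mold;
    }

    /**
     * Marks pixels that are closer to the sensor than the mold by more than
     * `threshold` depth units. Assumes depth values grow with distance.
     */
    static boolean[] foreground(int[] current, float[] mold, int threshold) {
        boolean[] mask = new boolean[current.length];
        for (int i = 0; i < current.length; i++) {
            mask[i] = mold[i] - current[i] > threshold;
        }
        return mask;
    }

    public static void main(String[] args) {
        float[] mold = captureBackground(new int[][] {
            {1000, 1000, 1000, 1000},
            {1002, 998, 1000, 1000},
        });
        boolean[] mask = foreground(new int[] {1000, 800, 995, 1200}, mold, 20);
        System.out.println(Arrays.toString(mask)); // [false, true, false, false]
    }
}
```

Averaging several empty-room frames smooths sensor noise out of the mold, and the threshold keeps small depth jitter from showing up as phantom people; anything noticeably closer to the sensor than the mold counts as part of a person.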

A big issue I had to deal with was user feedback. I knew that I wanted particles to be generated somehow by the people wandering through the installation, but it took some thought to work out how to present the interactivity so that people would, at the very least, understand that they were in control of what they were seeing. After some testing, we found we had the best results when each person generated a silhouette in addition to the particles. This way it is far easier to mentally connect the dots between what you see on the walls and what you are doing.
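
One way to tie particles to people is to spawn them at random points inside each silhouette, so the streamers visibly come out of the figure a visitor recognizes as their own. The sketch below assumes a boolean foreground mask like the one background subtraction produces; `SilhouetteEmitter` and `spawnPoints` are hypothetical names, and uniform random sampling over silhouette pixels is an assumed choice rather than the installation’s documented behavior.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Hypothetical sketch: choose particle spawn points inside people's silhouettes.
final class SilhouetteEmitter {
    /**
     * Picks `count` random foreground pixels (flat indices into the mask) as
     * spawn points. Returns an empty list when nobody is in the room.
     */
    static List<Integer> spawnPoints(boolean[] mask, int count, Random rng) {
        List<Integer> silhouette = new ArrayList<>();
        for (int i = 0; i < mask.length; i++) {
            if (mask[i]) silhouette.add(i);
        }
        List<Integer> spawns = new ArrayList<>();
        if (silhouette.isEmpty()) return spawns;
        for (int k = 0; k < count; k++) {
            // Every silhouette pixel is equally likely, so people covering
            // more pixels (e.g. standing closer) emit more particles.
            spawns.add(silhouette.get(rng.nextInt(silhouette.size())));
        }
        return spawns;
    }

    public static void main(String[] args) {
        boolean[] mask = {false, true, true, false, false, true};
        System.out.println(spawnPoints(mask, 5, new Random(1)));
    }
}
```

Each index can be turned back into pixel coordinates with `x = i % width` and `y = i / width`, then into 3D via its depth, before the new particle joins the orbital simulation. Giving it a downward initial velocity would produce a ‘descending streamer’ look, though that detail is also an assumption.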

In fact, it is really interesting to watch people come in and get their first impressions of Cosmic Dust, because almost all of them realize right away that the figures on the walls are projections of themselves, but at first they generally have no idea how or what is putting them there. The ways people try to figure out where they have to stand and which figure is theirs are very amusing.

Beyond its spatial interaction, the piece is also sound-reactive, although this played a much smaller part than I would have liked. It would have been nice to use one or all of the Kinect’s four on-board mics, but as far as I can tell this feature is poorly supported, if at all, by the library I used.
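
Sound reactivity often boils down to measuring how loud each audio buffer is and easing a visual parameter toward that level. The sketch assumes audio arrives as `float` samples in [-1, 1] from whatever input library is in use; `SoundLevel` and its attack/release factors are hypothetical, and RMS loudness with asymmetric smoothing is an assumed approach, not necessarily what Cosmic Dust does.

```java
// Hypothetical sketch: smoothed loudness meter for driving visuals from a mic.
final class SoundLevel {
    private final double attack;  // 0..1: fraction of the gap closed when getting louder
    private final double release; // 0..1: fraction of the gap closed when getting quieter
    private double smoothed = 0.0;

    SoundLevel(double attack, double release) {
        this.attack = attack;
        this.release = release;
    }

    /** Root-mean-square amplitude of one buffer of samples in [-1, 1]. */
    static double rms(float[] samples) {
        if (samples.length == 0) return 0.0;
        double sumSquares = 0.0;
        for (float s : samples) sumSquares += (double) s * s;
        return Math.sqrt(sumSquares / samples.length);
    }

    /** Eases the smoothed level toward this buffer's RMS: fast rise, slow fall. */
    double update(float[] samples) {
        double level = rms(samples);
        double k = level > smoothed ? attack : release;
        smoothed += k * (level - smoothed);
        return smoothed;
    }

    public static void main(String[] args) {
        SoundLevel meter = new SoundLevel(0.5, 0.05);
        float[] loud = {0.8f, -0.8f, 0.8f, -0.8f};
        float[] quiet = new float[4];
        System.out.println(meter.update(loud));  // rises quickly
        System.out.println(meter.update(quiet)); // decays slowly
    }
}
```

The returned value can then scale any visual parameter, such as particle speed or brightness; the fast-attack, slow-release shape keeps the visuals from flickering on every small dip in volume.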

Trying to use a cheap, standard 1/8-inch-jack microphone with a Mac mini is annoying. Every single mic I plugged into the line-in jack had such severe gain problems that the signal was effectively non-existent. Apple’s apparent incompatibility with easily available hardware is frustrating. To resolve this issue, I had to use a spare portable mixing board as a pre-amp for the microphone. Perhaps the mic could have been positioned better, maybe the levels were too low, or perhaps everyone was simply more interested in the shapes they could make, but I think few people noticed that the piece reacts to sound.

All in all, Cosmic Dust was a huge success with virtually everyone. People of all ages enjoyed the piece, from infants barely able to stand to folks as old as could make it through the gates! So many people came through that a line often formed outside our space. Cosmic Dust also received a great deal of attention from the press, with write-ups and photos in The Seattle Times, City Arts, and Seattle Weekly. We even made the front page of the online version of The Times for a little while and subsequently landed in color on the front of the “NWTuesday” page in print! Christian also created a .gif animation of Cosmic Dust on GIF LORDS.

Altogether, it’s inarguable that Cosmic Dust at Bumbershoot 2012 has been my most successful project to date and nothing short of a huge feather in the cap for ‘I Want You’. I’m really excited to be off to such a great running start!